How we selected the starter set of resources, and curated ‘Our Selection’

Margaret

July 17, 2020, 8:25 a.m.

It is part of our mandate within the EU-Citizen.Science project to take stock of the vast number of existing tools, guidelines and other materials available in and for citizen science, and to highlight, curate and organise those that are high quality and represent best practice in the field. This is at the heart of the second objective for our project, to CONSOLIDATE the citizen science knowledge base and celebrate outstanding practices and state of the art in citizen science in Europe.

Naturally, as we launch the alpha version of the platform (the first objective of our project), we also want a strong starter set of resources for the wider citizen science community to browse, one that serves as a good example of the types of resources and projects we are asking people to add and share.

We therefore took the opportunity to test the quality criteria that we developed for the resources (see ‘How we developed the quality criteria for resources’) while collecting a starter set of resources for the alpha release of the platform. As we did so, we asked all members of the consortium to add a Gold Star to those resource profiles that should be included in our own curated selection of best practice resources.

We started with a set of resources suggested by consortium members during our project kick-off meeting in Berlin, added a set collected through the WP5 Training Needs Survey, and then asked consortium members to add any further resources as part of our WP3 quality criteria development process.

We used a Google Form to fill in all of the mandatory and optional resource profile information, and then used the same form to go thoroughly through the entire quality criteria moderation process (shown in the table below) for our own starter set. The specific criteria were assessed on a 5-point scale, and all resources that exceeded the threshold of 50% of the total possible points are included in the online starter set.

| Step 1: Overarching Criteria | |
| --- | --- |
| Criterion 1 (Required) | The resource is about citizen science or relevant to citizen science |
| Criterion 2 (Required) | The resource has the following metadata: Title of the Resource; URL; Abstract; Resource category (e.g. guideline, tool, training resource, etc.); Resource audience; Keywords; Author (or project, or leading institution); Language; Theme (e.g. engagement, communication, data quality, etc.) |
| Criterion 3 (Suggested) | The resource engages with the 10 Principles of Citizen Science |

| Step 2: Specific Criteria | |
| --- | --- |
| Access to the resource | 1) The resource is easy to access (e.g. registration process)? Strongly Agree (e.g. completely open, no registration, such as a YouTube video); Agree (e.g. optional registration or one click access to the resource through a social media registration); Neutral (e.g. average, undecided); Disagree (e.g. filling in a registration form); Strongly Disagree (e.g. complex registration process such as multiple steps to register or paid registration) |
| Readability and Legibility | 2) The resource is clearly structured according to the type of the resource (e.g. if a scientific paper or a report, it includes an introduction, methodology, results, discussion and/or conclusions; or if a methodology document, it includes an introduction, audience description, step by step methodology, and an example)? Strongly Agree; Agree (Clearly structured but e.g. the discussion doesn’t reflect the introduction); Neutral; Disagree; Strongly Disagree (Not clearly structured, very difficult to follow) |
| | 3) The resource has a clear language (e.g. it is easy to read and understand for the intended target audience and it is concise - for example, if the intended user is a general audience, it is free from ambiguity, rare words and jargon - and even when they need to be used, their meanings are explained clearly)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| | 4) The resource pays attention to basic formatting (e.g. titles, paragraphs and references are easy to capture; grammar and spelling are correct; legible font and sufficient font size are used)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| Content | 5) The resource clearly describes its aims, goals and methods? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| Applicability | 6) The resource is easy to implement (it touches on how the resource could be implemented and the context that it could be useful and it provides recommendations for its further use)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| | 7) The resource is easy to adapt to different cases (it explains the limitations of the resource and the context that it could be useful and it provides guidelines or recommendations for its adaptation to different cases)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| Object | 8) If the resource is an audio object, it is clearly audible (no interruption, no background noise, etc.)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |
| | 9) If the resource is a video, an image or illustration, the quality is good enough (e.g. clear and sharp)? Strongly Agree; Agree; Neutral; Disagree; Strongly Disagree |

| Step 2 - Supporting Criteria | |
| --- | --- |
| Evaluation | 10. Was the resource used or is it currently being used in the context of citizen science or in a relevant initiative? (This could be answered based on the knowledge of the moderator/s and if the resource itself mentions this.) Yes, used with positive outcomes; Yes, used with negative outcomes; Yes, but the outcomes are not available or not known; No, the resource has not yet been used in practice; Don’t know |
| | 11. Has the resource been evaluated before in terms of the content, methods and results? (This could be answered based on the knowledge of the moderator/s and if the resource itself mentions this.) Yes, evaluated with positive results; Yes, evaluated with negative results; Yes, evaluated with mixed results; Yes, the results are not available or not known (no score - supporting argument); No, not evaluated; Don’t know |
| Impact | 12. Does the resource refer to an impact (e.g. on science, policy, society, etc.) it had in the past and/or is currently having and/or it could have in the future? Yes, (reason to support the inclusion decision, if the result is good); No, (reason to exclude as this is a supporting criteria, but is just for info); Don’t know |
| | 13. If the resource refers to an impact, has this been measured somehow? Yes, (reason to support the inclusion decision, if the result is good); No, (reason to exclude as this is a supporting criteria, but is just for info); Don’t know |

| Final Step - Our Selection | |
| --- | --- |
| Gold Star | Would you like to give this resource a gold star, for inclusion in the Curated List on the platform? Yes - this is a Gold Star resource, that should definitely be featured; No - this is just a normal good resource |

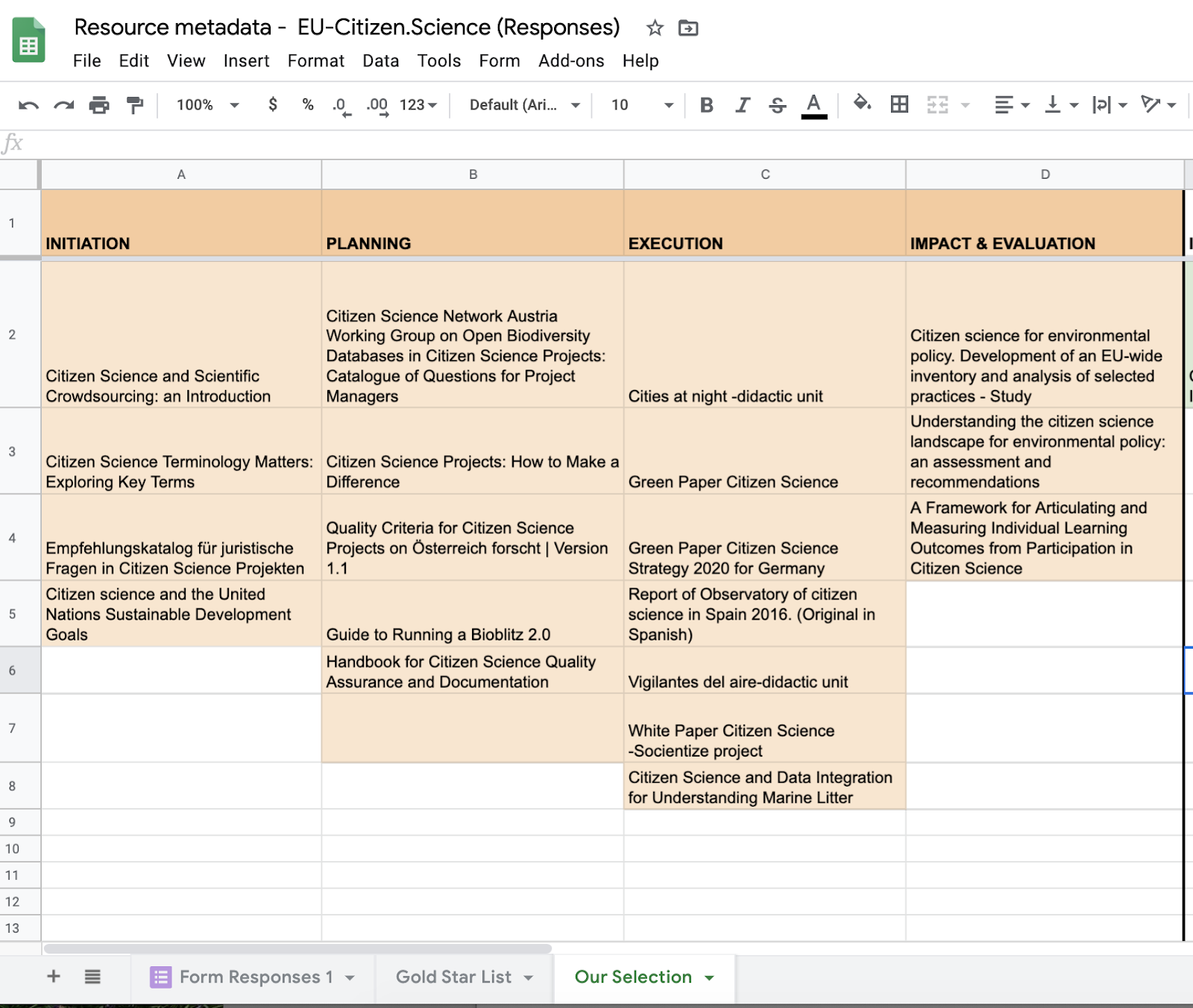
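For readers who want to reproduce this kind of moderation on their own spreadsheet export, the required criteria and the points threshold can be sketched in Python roughly as follows. This is a minimal illustration, not the form logic we actually used: the field names, the mapping of answers to 1-5 points, and the choice to skip criteria that do not apply (such as the audio criterion for a text document) are all assumptions. The suggested Criterion 3 and the supporting criteria are left out of the sketch.

```python
# Metadata required by Criterion 2 (the field names are illustrative).
REQUIRED_METADATA = [
    "title", "url", "abstract", "category", "audience",
    "keywords", "author", "language", "theme",
]

# Assumed mapping of the 5-point scale to points; the post does not state it.
LIKERT_POINTS = {
    "Strongly Agree": 5,
    "Agree": 4,
    "Neutral": 3,
    "Disagree": 2,
    "Strongly Disagree": 1,
}


def missing_metadata(profile):
    """Return the required metadata fields that are absent or empty."""
    return [field for field in REQUIRED_METADATA if not profile.get(field)]


def exceeds_threshold(answers, threshold=0.5):
    """Check whether the answers earn more than `threshold` of the possible points.

    `answers` maps a criterion number to an answer label, or to None when the
    criterion does not apply to this type of resource (an assumption).
    """
    scored = [LIKERT_POINTS[a] for a in answers.values() if a is not None]
    if not scored:
        return False  # nothing could be scored, so the resource cannot pass
    possible = max(LIKERT_POINTS.values()) * len(scored)
    return sum(scored) / possible > threshold


def include_in_starter_set(profile, about_citizen_science, answers):
    """Apply the two required criteria, then the specific-criteria threshold."""
    if not about_citizen_science:   # Criterion 1
        return False
    if missing_metadata(profile):   # Criterion 2
        return False
    return exceeds_threshold(answers)


profile = {field: "example value" for field in REQUIRED_METADATA}
answers = {
    1: "Strongly Agree", 2: "Agree", 3: "Agree", 4: "Neutral",
    5: "Agree", 6: "Neutral", 7: "Disagree", 8: None, 9: None,
}
print(include_in_starter_set(profile, True, answers))
```

In this example the resource earns 25 of 35 possible points, so it passes. Because the threshold has to be exceeded, a resource scoring exactly 50% would not be included under this reading.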

The resulting spreadsheet of good quality resources was then sorted to highlight those that had been given a Gold Star, and those were further organised according to their ‘theme’. The theme indicates the stage of the citizen science project lifecycle that the resource relates to.

To keep the structure of the Our Selection page on the website easy to see and navigate, we have used the four main stages of any project as our categories for organising the ‘Gold Star’ resources, namely Initiation, Planning, Execution, and Impact & Evaluation (more frequently called Closure in project management texts).
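The sorting and grouping described above could be done by hand in a spreadsheet, but a short Python sketch shows the idea. The keys `title`, `gold_star` and `stage` are hypothetical names for the spreadsheet columns, and the example rows are placeholders rather than real resources.

```python
from collections import defaultdict

# The four stages used as categories on the Our Selection page.
STAGES = ["Initiation", "Planning", "Execution", "Impact & Evaluation"]


def our_selection(resources):
    """Group Gold Star resources by stage, keeping the stage order above.

    Each resource is a dict with the hypothetical keys "title", "gold_star"
    (bool) and "stage". Resources whose stage is not one of STAGES are left
    out of the result.
    """
    grouped = defaultdict(list)
    for resource in resources:
        if resource["gold_star"]:
            grouped[resource["stage"]].append(resource["title"])
    return {stage: sorted(grouped[stage]) for stage in STAGES}


rows = [
    {"title": "Resource B", "gold_star": True, "stage": "Planning"},
    {"title": "Resource A", "gold_star": True, "stage": "Planning"},
    {"title": "Resource C", "gold_star": False, "stage": "Execution"},
    {"title": "Resource D", "gold_star": True, "stage": "Impact & Evaluation"},
]
for stage, titles in our_selection(rows).items():
    print(stage, titles)
```

Stages with no Gold Star resources still appear with an empty list, so the page structure stays the same as the selection grows.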